ddrueding

Fixture

Parts arrived today, so I started the build. So far I'm about 6 hours into assembly.

AMD 9950X3D, delidded by Thermal Grizzly with a 2 year warranty

Thermal Grizzly Direct Die Waterblock

ASRock X870E Taichi Lite DOA, replaced with ASUS PRIME X870-P WIFI

96GB Corsair Vengeance DDR5 6000

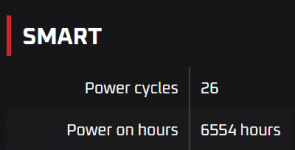

1TB Crucial T705 Gen5 SSD

MSI 4090 Suprim with Alphacool Waterblock

Seasonic Prime 1200 PSU

Silverstone RM52 6U Rackmount chassis

2x Alphacool 360 Radiators

2x Alphacool 120 Reservoirs

2x Alphacool D5 clone pumps

7x Noctua NF-A12x25 PWM Fans

Dynamat

It has been a while since I've done an over-the-top build, and I couldn't help myself. The plan is separate water cooling loops for the CPU and GPU, which lets them settle at different temps and makes future changes easier. This is probably 3x the cost of a system that would perform within 5%, but it should be very quiet and satisfying. Not going for looks, just performance and cool factor (in my eyes at least).

I picked this case for the front IO and because the radiators fit in it. I stripped out the rest of the features, as they aren't needed for this build.

Getting vibration treatment on the chassis first was important, as this chassis is just steel and vibrated all over. I added the Dynamat in chunks to work around the protrusions on the panels until tapping or banging anywhere just made a "thud". This did involve sticking some panels together, but they aren't ones needed for service.

Unfortunately, Alphacool seems to have forgotten one of the plugs on each of the reservoirs. I've put in a service ticket and hope to have them soon. In the meantime I may use the parts I have to get the CPU loop working and run the GPU air-cooled. Currently pressure testing the CPU loop overnight.
