It's more likely supply chain issues due to pandemic-related stuff. We are seeing it at work with buying hardware and lead times are being noted at 10-12 weeks out right now, mostly for SSDs but also for raid controller chips and some network controller chips. :-/


Home NAS

- Thread starter LiamC


Mercutio

Fatwah on Western Digital

'Tis the season to buy stupid crap.

I just ran across this NVMe to 5 port SATA adapter on Amazon. It features everyone's favorite JMicron bridge controller, but it's a potential solution for people trying to figure out how to cram some extra SATA ports on to a contemporary SFF build. Amazon reviews suggest the bridge IC needs active cooling. I don't need such a thing but I'm also glad they're out there to be had.

This little $100 card supports 4x U.2 on an 8 lane PCIe card without needing bifurcation, which basically no Ryzen motherboard supports. If you're running that on desktop Ryzen, I guess you might be bottlenecking your RTX 3080 by using one of those, but I doubt we're going to see U.2 drive connectors on desktop boards anyway. There's also a slightly more expensive version for M.2. They both use the same PCIe bridge chip and they're supposed to be driver and configuration-free.


Mercutio

Fatwah on Western Digital

Seagate SMR drives seem to behave in RAIDz1. I use 4MB block sizes since all the data I'm copying is video, and relatively small 4-drive arrays (two 15TB arrays and two 18TB). Everything is fine, if not particularly fast. Writes are averaging about 75MB/sec/array. I've been feeding data into this system for about three weeks. I frankly expected it to fail by now and it hasn't, which makes me need to rethink how I want to allocate the disks I have available.

Everything is running to a basic Ryzen 2400G with 64GB RAM and a LSI SAS controller. It's stuck on 2.5GbE, but the network interface doesn't seem to bottleneck disk transfers as much as the SMRness of the drives. I'm just using TrueNAS at the moment.

There *is* a working ZFS port for Windows. Performance numbers, while not as good, are getting in the right ballpark.


I was hoping NAS would get better, but the reality is that Synology has screwed over consumers by making us buy only the "Synology" branded Toshiba drives at stupidly high prices, e.g., over $600 for 16TB.

Meanwhile QNAP has gone overboard with all the extra (and useless) 2.5" bays, so a 12-bay NAS only has 8 usable bays and a 16-bay NAS has 12 usable bays, but the latter is huge and pricey.

Of the 8-slotters with a decent CPU, the Synology 1821+ and QNAP TS-873A are both rather old already, and the 873A doesn't support external expansion other than the crappy port multipliers on USB. The Synology 21 series (rather than the 22 series) works fully with all drives, if we trust they won't zap it with a nasty Toshiba-izing FW.

Are there other NAS brands/models worthy of consideration? I'm not interested in building a homemade NAS for several reasons and especially because it should be compact and portable to manually transport and ship around.


Have you explored the TrueNAS (FreeNAS) turnkey solutions?

www.truenas.com

TrueNAS Mini | Enterprise-grade Storage for the Home or Office

Small but mighty, the TrueNAS Mini brings the tools used by storage professionals to your home or office, so you can manage your data like a pro.

Asustor is another brand you could look into. They did just recently get hit with a bad exploit (Deadbolt ransomware).

www.asustor.com

ASUSTOR was established as a subsidiary of ASUS and is a leading innovator and provider of network attached storage (NAS). ASUSTOR specializes in the development and integration of storage, backup, multimedia, video surveillance and mobile applications for home and enterprise users.

Interesting. I don't really understand the ransomware. Does it enter through the Windows connection to the PC over SMB, or does an infected file have to have been copied over to the NAS and somehow execute itself there? I never connect the NAS to the internet, as of course that would be a silly risk.

After reading all about the Asustor I think they missed the boat on a couple of items. Most importantly, the 4-core Atom CPU is rather wimpy; of lesser concern is that there are no PCIe slots for upgrades. The TrueNAS has the same 4-core CPU in the base models, but the 8-core Atom CPU in the better 5-bay and the 8-bay NAS. The Synology and QNAP have the AMD Ryzen V1500B. I cannot find many benchmarks, but Geekbench (in Synology) indicates the 4-core Atom C3538 performs at about half of the V1500B Ryzen in the QNAP/Synology NAS. I need to delve into the TrueNAS a bit.

Can you explain in more detail the requirements you're trying to meet with a portable/small sized NAS, and why you are focused on CPU performance versus storage IO? Are you looking for a NAS that can saturate a 10Gb NIC?

I'm thinking of moving my current secondary NAS setup (8+5 drives) to offsite storage (>1200km) and replacing it with a newer 8-bay NAS to be used at home and some other locations (<1000km). Typical file size is 50-100MB, with many 4GB and a few of 1-2TB. Does IOPS matter much for the larger files? I want simple speed to copy about 1-2TB data back and forth from the NAS to the WIP SSDs on a regular basis.

I was trying to narrow down and understand your concerns about the CPU included in any of these NAS devices to know why you have concerns. I wasn't sure if you were planning to run other apps on the NAS. There isn't a ton of CPU performance needed to get decent IO on a zfs pool (TrueNAS), it would be limited by the performance of your HDDs mainly. Would you be using a 10Gb connection between systems to transfer the 1-2TB of data?
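For a rough feel of what the link speed means for a 1-2TB copy, here is a back-of-the-envelope sketch. It assumes decimal units (1TB = 10^12 bytes), ignores protocol overhead, and simply treats the slower of the network link and the disk pool as the bottleneck. The 500MB/sec pool figure is only an illustrative assumption, and the helper names are made up for this example.

```python
def link_mb_per_sec(gigabits: float) -> float:
    """Theoretical line rate of an Ethernet link in MB/sec (decimal units)."""
    return gigabits * 1000 / 8


def copy_minutes(size_tb: float, link_gbit: float, pool_mb_per_sec: float) -> float:
    """Minutes to move size_tb terabytes, limited by the slower of link and pool."""
    rate = min(link_mb_per_sec(link_gbit), pool_mb_per_sec)
    return size_tb * 1_000_000 / rate / 60


if __name__ == "__main__":
    assumed_pool_rate = 500  # MB/sec, an assumption for illustration only
    for link in (1, 2.5, 10):
        for size in (1, 2):
            minutes = copy_minutes(size, link, assumed_pool_rate)
            print(f"{size}TB over {link}GbE: ~{minutes:.0f} min")
```

With those assumptions, 1TB takes a bit over two hours on 1GbE, just under an hour on 2.5GbE, and about half an hour on 10GbE, where the disks rather than the link become the limit.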

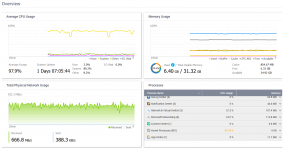

I did a quick test copying 1TB, 2TB, and 10TB files from my Linux VM (using a Samsung enterprise NVMe SSD) to my zfs NAS using a Samba mount over my 10Gb network link. My NAS uses older Intel Xeons (E5-2670 @ 2.60GHz) and 6TB 7200RPM NAS drives from 7+ years ago, to give you a rough idea of what you might expect.

From SSD to NAS:


- 2.9 sec for the 1TB (353MB/sec)

- 5.3 sec for the 2TB file (386MB/sec)

- 19.6 sec for the 10TB (534MB/sec)

From NAS to SSD:

- 1.7 sec for 1TB (602MB/sec)

- 2.8 sec for 2TB (731MB/sec)

- 17 sec for 10TB (616MB/sec)
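A quick sanity check on those numbers: multiplying each time by its rate gives the amount of data that actually moved. The sketch below only does that arithmetic on the six figures quoted above; the function names are made up for the example.

```python
# (seconds, MB/sec) pairs as quoted in the list above
runs = [(2.9, 353), (5.3, 386), (19.6, 534), (1.7, 602), (2.8, 731), (17.0, 616)]


def implied_size_mb(seconds: float, mb_per_sec: float) -> float:
    """Data moved, in MB, for a transfer of the given duration and rate."""
    return seconds * mb_per_sec


def seconds_for(size_mb: float, mb_per_sec: float) -> float:
    """Time needed to move size_mb at a steady rate."""
    return size_mb / mb_per_sec


if __name__ == "__main__":
    for secs, rate in runs:
        print(f"{secs:>5} s at {rate} MB/sec -> ~{implied_size_mb(secs, rate):,.0f} MB")
    # For comparison: a true 1TB file (10**6 MB) at 353 MB/sec
    print(f"1TB at 353 MB/sec: ~{seconds_for(1_000_000, 353) / 60:.0f} min")
```

Every run works out to roughly 1,000, 2,000, or 10,000 MB, so the files were on the order of gigabytes. A real 1TB file at 353MB/sec would take about 47 minutes.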

I'm getting 800-1000MB/sec continuous writes on the Xeon NAS and only ~570MB/sec continuous writes on the Excavator NAS with the RX-421ND. That is the minimum I could accept. Supposedly the V1500B is better than the RX-421ND, but IDK by how much.

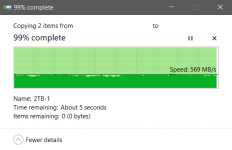

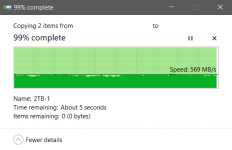

This is a 2GB copy from the main NAS (Xeon) to the backup (AMD) NAS.

I think you used GB rather than TB, but the point is the same. I now have some of the X18 drives to maximize capacity. However, I am still undecided about the new NAS and am testing the drives in old NASes. What are the thoughts on btrfs vs. EXT4? The comparison at Synology states that EXT4 has "less hardware requirements," but it is not clear whether EXT4 is always inherently much faster, or whether the file system does not matter much if the CPU is powerful enough.

From the specs it looks like QNAP is entirely different in its FS implementation. Instead of Synology's btrfs and EXT4, they have ZFS and EXT4. However, QNAP requires a whole new OS installation (Hero edition) to use ZFS, whereas Synology allows each volume to be created with either file system. Assuming the same CPUs, which of the newfangled file systems performs better, the QNAP ZFS or the Synology btrfs?

I've not done any performance comparisons of btrfs vs. QNAP's ZFS to be able to compare. Between ext4, btrfs, and zfs, I'd trust btrfs the least given its relatively younger age (less proven) when it comes to integrity checking and self-healing. I'd rather trust my data to a zfs filesystem, with its proven checksum capabilities and bit rot protection, than to btrfs. My requirements might be different from yours, though, and zfs isn't a perfect child either; it has some issues too. I'd likely even pick ext4 or xfs over btrfs.

I installed QuTS hero (ZFS), which was rather a PITA process. For some reason the reads are slower than with EXT4, though writes are about the same. The ZFS pools are the storage, with no volume layer like with EXT4.

On the QNAP it is impossible to change the ZFS array after creation, which seems like a bad idea. I will redo the whole configuration when the last three drives arrive. I cannot decide whether to go back to EXT4.

What configuration did you use when you built your zpool? How many drives, parity protection, etc?

ZFS can be used as a native pool which is what I use or you can create and carve out zvols from the pool space and actually put any filesystem you want on it as a block device. From there you can even snapshot them, etc for more granular protection.

Not sure why qnap makes it impossible to change after creating it but there are some restrictions when planning out a pool that are best to consider in advance for optimal performance. There are other options such as adding an L2ARC via an SSD to your zpool to cache reads to offer better performance.


At first it was 5 EXOS in RAID 5, but we will do 8 EXOS in RAID 6. Performance doesn't scale much from 4 to 5 drives, so I'm not expecting any significant improvement at eight. I keep playing around with the settings, and the benchmarks don't correlate very well with the actual copy speeds. Some settings apply at the folder level, or whatever that unit is that maps to a drive. I am using the thick setting with 32K blocks, as it tests with the best speeds. I am not using file deduplication; that is more for servers, if I understand right. The total capacity is a few TB less with ZFS than with EXT4. I tried using SSD caching a few years ago and it didn't help my use case. I only needed caching on writes, not reads.

The other thing I noticed is that the new EXOS drives are much noisier in seeks than the older 10TB Seagate drives, although they are both supposedly from the same Enterprise class with helium. One of my 10TB Seagates is actually labeled EXOS but it has the same part number as the older ones.


I'd never recommend dedup with zfs, it's a terrible option and needs tons of RAM with minimal benefit. Compression however is worth the minimal increased CPU usage.

zfs will use a bit more space for parity and metadata, and also 32 bytes per block for the 256-bit checksums that provide the bit rot integrity protection EXT4 does not have. In the event of corruption, a zfs scrub will automatically repair the data from the pool's redundancy if the checksum doesn't match.

When you create the pool, do you have the option to change the ashift value to align the drives?

zpool create -o ashift=12 <etc> for more performance but less space efficiency or ashift=9 for more space efficiency but less performance.

Then enable compression: zfs set compression=lz4 <pool name>
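To make the ashift trade-off a bit more concrete: ashift is the base-2 logarithm of the smallest allocation unit ZFS uses on a vdev, so ashift=9 means 512-byte sectors and ashift=12 means 4096-byte sectors. The sketch below only illustrates how a single small (for example, compressed) block rounds up on one disk. It deliberately ignores RAIDZ parity and padding, and the function names are hypothetical.

```python
def sector_bytes(ashift: int) -> int:
    """Smallest allocation unit implied by an ashift value (2 ** ashift)."""
    return 1 << ashift


def allocated_bytes(data_bytes: int, ashift: int) -> int:
    """Round a block up to a whole number of sectors (single disk, no parity)."""
    sector = sector_bytes(ashift)
    sectors_needed = -(-data_bytes // sector)  # ceiling division
    return sectors_needed * sector


if __name__ == "__main__":
    block = 1500  # bytes left after compression, an illustrative value
    for ashift in (9, 12):
        used = allocated_bytes(block, ashift)
        print(f"ashift={ashift}: {sector_bytes(ashift)}-byte sectors, "
              f"{block} bytes occupy {used} bytes")
```

Small blocks waste more space with ashift=12, which is the space-efficiency cost mentioned above. For large video files the difference is negligible.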

If you want more write performance you would need to configure your pool with more vdevs. This is what I did with my setup but it has a bit more overhead for parity as a tradeoff. This is similar to raid 60. I did 2 x 10-disk raidz2 in my pool


Code:

    zpool status
      pool: naspool_01
     state: ONLINE
      scan: scrub repaired 0B in 0 days 07:18:56 with 0 errors on Sun Mar 13 08:42:58 2022
    config:

        NAME          STATE     READ WRITE CKSUM
        naspool_01    ONLINE       0     0     0
          raidz2-0    ONLINE       0     0     0
            sdu       ONLINE       0     0     0
            sdt       ONLINE       0     0     0
            sdm       ONLINE       0     0     0
            sdq       ONLINE       0     0     0
            sdg       ONLINE       0     0     0
            sds       ONLINE       0     0     0
            sdp       ONLINE       0     0     0
            sdf       ONLINE       0     0     0
            sdh       ONLINE       0     0     0
            sdo       ONLINE       0     0     0
          raidz2-1    ONLINE       0     0     0
            sdl       ONLINE       0     0     0
            sdk       ONLINE       0     0     0
            sde       ONLINE       0     0     0
            sdc       ONLINE       0     0     0
            sdi       ONLINE       0     0     0
            sdd       ONLINE       0     0     0
            sdj       ONLINE       0     0     0
            sdn       ONLINE       0     0     0
            sdb       ONLINE       0     0     0
            sdr       ONLINE       0     0     0

I've decided not to use some of the features, such as dedup and snapshots. I have not tested the compression performance yet, but maybe I should, if it doesn't create a slowdown. I have not found compression to be useful in Windows, though.

The NAS has 32GB of ECC RAM (replacing the basic 8GB of regular RAM), which I hope is enough, as higher amounts were rather expensive. It only has 8x 3.5" slots (my choice, to keep the size/weight under control), so Z2, i.e., 75% efficiency, uses as much parity space as I am willing to lose.
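For reference, the 75% figure is just data disks divided by total disks. The sketch below compares that with the 2 x 10-disk raidz2 layout mentioned earlier in the thread. It is an idealized calculation that ignores ZFS metadata, padding, and reserved space, and the function name is made up.

```python
def raidz_usable_fraction(disks_per_vdev: int, parity: int) -> float:
    """Ideal fraction of raw capacity left for data in one RAIDZ vdev."""
    if not 0 < parity < disks_per_vdev:
        raise ValueError("parity must be at least 1 and less than the disk count")
    return (disks_per_vdev - parity) / disks_per_vdev


if __name__ == "__main__":
    layouts = {
        "8-disk raidz2": (8, 2),
        "2 x 10-disk raidz2 (same fraction per vdev)": (10, 2),
        "single 20-disk raidz2": (20, 2),
        "5-disk raidz1": (5, 1),
    }
    for name, (disks, parity) in layouts.items():
        print(f"{name}: {raidz_usable_fraction(disks, parity):.0%} usable")
```

Splitting 20 disks into two raidz2 vdevs gives up four disks to parity instead of two, which is the extra overhead traded for more write performance.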

I don't know about the command line stuff. There should be a way to do some of that, but I'm just using the GUI. I suspect that some settings are doing that in the background.

I found this article about the ransomware afflicting QNAP, and it also mentions another one that impacted the ASUSTOR NAS.

www.bleepingcomputer.com

I don't think it is an issue if not connected to the internet. I update the software IMG file from a file downloaded separately.


Massive Qlocker ransomware attack uses 7zip to encrypt QNAP devices

A massive ransomware campaign targeting QNAP devices worldwide is underway, and users are finding their files now stored in password-protected 7zip archives.

Mercutio

Fatwah on Western Digital

Very few modern file formats aren't compressed as part of the standard in the first place. You might as well enable FS level file compression for the oddball exceptions, but almost no one is storing plain text or uncompressed TIFFs in quantities that will result in much savings.

I finished copying and subsequently fully verifying the data that I want to keep. Overall I think the NAS works pretty well for the low-powered Ryzen. Over 70% of the cost was the hard drives. I only added the ECC RAM and repurposed an unused dual 10G SFP+ card. I may swap that for a different one eventually. The ZFS and RAID-Z2 give me some confidence that the data is solid. Unfortunately this NAS doesn't talk, probably as a cost savings measure.

I suppose it is some stupid QNAP thing, since my Synology NAS units all boot in under 2 minutes. After logging in it takes about a minute to unlock each folder. I'm not sure what goes on during that time; drives are mapped and the root folders are visible immediately, though.

I can barely contemplate the amount of data you have in one array. I have more than that in multiple NAS, but it's mostly for redundancy as I figure a whole unit could die and lose my life's work.


How do you turn your NAS on and off, just with the switch? I would think that your 20 drives could be loud unless out of the way.

My computer working area is also for WFH, and I'm not liking the noise of the newer Exos drives. I can move the NAS to the other side of the room (SFP+ supposedly reaches up to 7m), but then there is no way to easily control it. IIRC they had the WOL function for PCs years ago, but that doesn't seem to work. I could try to find the points on the mainboard for the power, but that's not ideal.


I have two nas arrays, the second one is a 12-bay that I use as a backup of the larger one. They're connected to each other via my 10Gb switch in the same rack.

My main nas stays on 24x7 but if I need to turn it off, I log in via ssh and shut it down like a normal Linux system. When I need to power it back on, I open the onboard BMC web page and issue a power on.

Everything is in a 24U rack in my basement utility area. The noise isn't great but it's not terrible when I'm in the room. Otherwise I don't hear it in the house because it's far away. I have 4 of those 16TB Exos drives but haven't really heard them under load to know if they're annoying sounding.

If you need a longer cable you may be able to get an sfp+ optical transceiver and an inexpensive om3 fiber cable that should be good up to 300m.


I suppose there are some advantages to having a true server like you have, since my NAS has no BMC.

It turns out that I forgot the WOL does not work with the SFP+ because that is off when the power is off. Only the 2.5GbE (RJ45) ports are able to accept WOL.
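For anyone wanting to script the wake-up: a WOL "magic packet" is just 6 bytes of 0xFF followed by the target's MAC address repeated 16 times, usually sent as a UDP broadcast. Below is a minimal sketch. The MAC address is a placeholder, UDP port 9 is a common convention rather than a requirement, and it assumes WOL is enabled on the NAS and that the packet reaches one of the RJ45 ports.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return 6 bytes of 0xFF followed by the 6-byte MAC repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected 12 hex digits, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))


if __name__ == "__main__":
    # Placeholder MAC; substitute the address of the NAS's RJ45 port.
    packet = build_magic_packet("00:11:22:33:44:55")
    print(len(packet))  # 102
    # send_wol("00:11:22:33:44:55")  # uncomment to actually put it on the wire
```

The sending machine has to be on the same broadcast domain as the NAS port, since routers normally do not forward these broadcasts.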


I recall you mentioned the noise factor of your Exos drives. I'm curious if these WDC Ultrastar HC550 would be better?

Perhaps yes, but I did not expect that the seeks would be so loud although some had complained.

I looked at so many drives that I can't recall if the 18TB Ultrastars were available, or maybe not 5+ at that price. Some sites only allow 1 or 2 at the discount price and then the rest are higher. I've spent a crazy amount this month, so I hope no more storage expenses are needed for a long time.


Now I'm debating getting the WD 20TB Ultrastar, but I can see they sell a WD Gold 20TB that has almost the same specs. The only difference I could find is that the Ultrastar supports Self-Encrypting Drive (SED) and the Gold does not show it as an option. Everything else looks to be identical. Amazon has the 20TB Gold for $399 direct from Amazon, so I'm tempted if the warranty will be valid. I can get the Ultrastar from B&H with employee discount for $430, but if the only difference is SED I don't really care enough for that feature.

The 20TB Seagate Exos X20 or IronWolf Pro 20TB is more money right now (from a more-trusted seller). I don't know if those would be a better drive. The WD 20TB show lower peak wattage of 7W versus 9.4W.


Mercutio

Fatwah on Western Digital

How does one get an employee discount from B&H?

Mercutio

Fatwah on Western Digital

Drobo filed for Chapter 11 this week. I have some Drobo hardware and it's been fine-ish. No complaints in particular but I've always liked Synology better for all the same stuff.