That crypto-mining crud in video cards was a nightmare a few years ago, to the point where normal users could not find cards in stock. I think nVidia eliminated it in the later phases of the 30 series, but nothing was mentioned for the 40 series.

Video Cards

- Thread starter LunarMist

Chewy509

Wotty wot wot.

> Does this all mean that there are no 50 series GPUs coming in 2024?

IIRC, nVidia said no new architecture until 2025. They'll look at refreshes of the current generation (aka Supers and Super Tis) and a continued focus on AI for 2024.

Some reports are also indicating that the 50xx series will be a refresh of the current Ada Lovelace GPU (same architecture on a die shrink), rather than a new implementation.

https://www.alliedgamingpc.com.au/news/NVIDIA-Next-Gen-GeForce-RTX-5090-RTX5080-Arrive-in-2025/#:~:text=We%20won't%20see%20a,RTX%202060%20SUPER%20graphics%20cards.

Mercutio

Fatwah on Western Digital

I can't believe there's such a thing as an independent GPU repair specialist, but here's this guy on YouTube explaining the realities of having a fat ass RTX 4090 mounted horizontally.

Watching a couple of his videos, I have serious respect for a guy willing to de-solder and re-mount a contemporary GPU.

Mercutio

Fatwah on Western Digital

Many issues would be resolved if we still had viable horizontal desktop cases.

Silverstone still offers traditional desktop cases. The GD11 is a big roomy boy that can hold a 240mm radiator and has room for an RTX 4090. The Grandia series cases have a very normal ATX desktop PC profile, similar to the computers we were all using around 1995.

Mercutio

Fatwah on Western Digital

The world of video card leakers seems to think that Intel Battlemage will have 3, 5, 7 and 9-series SKUs, with the most expensive models landing somewhere around RTX 4070 in terms of standard benchmarks, but with 24GB RAM and an MSRP of around $500. That would be just about perfect IMO.

Also, if you happen to live near a Microcenter, this is a pretty crazy deal. 12th gen i5 + mATX motherboard + 16GB DDR4 + A770 for $450.

Mercutio

Fatwah on Western Digital

For GPUs? Leakers say they're still targeting a TDP of 225W, like the A770 has. Arc had tons of driver issues and performance quirks at launch, but these have largely been addressed, and they mattered mostly to gamers rather than regular people anyway.

jtr1962

Storage? I am Storage!

> Also, if you happen to live near a Microcenter, this is a pretty crazy deal. 12th gen i5 + mATX motherboard + 16GB DDR4 + A770 for $450.

There's one about half a mile from me. You're basically getting the graphics card thrown in for free once you add up the regular prices of all the other stuff. Looking at some data, the A770 can do roughly 20 TFLOPS single precision. That's about 10 times as much as the integrated GPU on my A10-7870K. And the i5 is of course far better for regular computing tasks. I don't need the extra graphics or compute horsepower, but if I did, that's a tempting deal.
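A rough way to check figures like these is the usual spec-sheet peak: each FP32 ALU can retire one fused multiply-add (2 FLOP) per clock. The minimal Python sketch below assumes commonly published specs that are not from the post (4096 FP32 ALUs at about 2.4 GHz for the A770; 512 shaders at 866 MHz for the A10-7870K's Radeon R7 iGPU). With those assumptions the ratio comes out nearer 20x than 10x, though peak numbers say little about real application throughput.

```python
def peak_fp32_tflops(shader_alus: int, clock_ghz: float) -> float:
    """Theoretical FP32 TFLOPS: ALUs x 2 FLOP per FMA x clock (GHz) / 1000."""
    return shader_alus * 2 * clock_ghz / 1000.0


# Assumed spec-sheet figures (not taken from the thread).
A770_ALUS, A770_CLOCK_GHZ = 4096, 2.4            # Arc A770, max boost clock
A10_IGPU_ALUS, A10_IGPU_CLOCK_GHZ = 512, 0.866   # A10-7870K, Radeon R7 iGPU

a770 = peak_fp32_tflops(A770_ALUS, A770_CLOCK_GHZ)
a10_igpu = peak_fp32_tflops(A10_IGPU_ALUS, A10_IGPU_CLOCK_GHZ)

if __name__ == "__main__":
    print(f"A770 peak FP32: {a770:.1f} TFLOPS")
    print(f"A10-7870K iGPU: {a10_igpu:.2f} TFLOPS")
    print(f"Ratio:          {a770 / a10_igpu:.0f}x")
```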

sedrosken

Florida Man

For what it's worth, my A770LE is great. If you play a lot of DX11/9 games it can still be a little iffy (but it has come a very very very long way from launch), and for compute if you're not buying nVidia you're just not getting the job done because you don't have CUDA, but for DX12 and encoding? It's awesome.

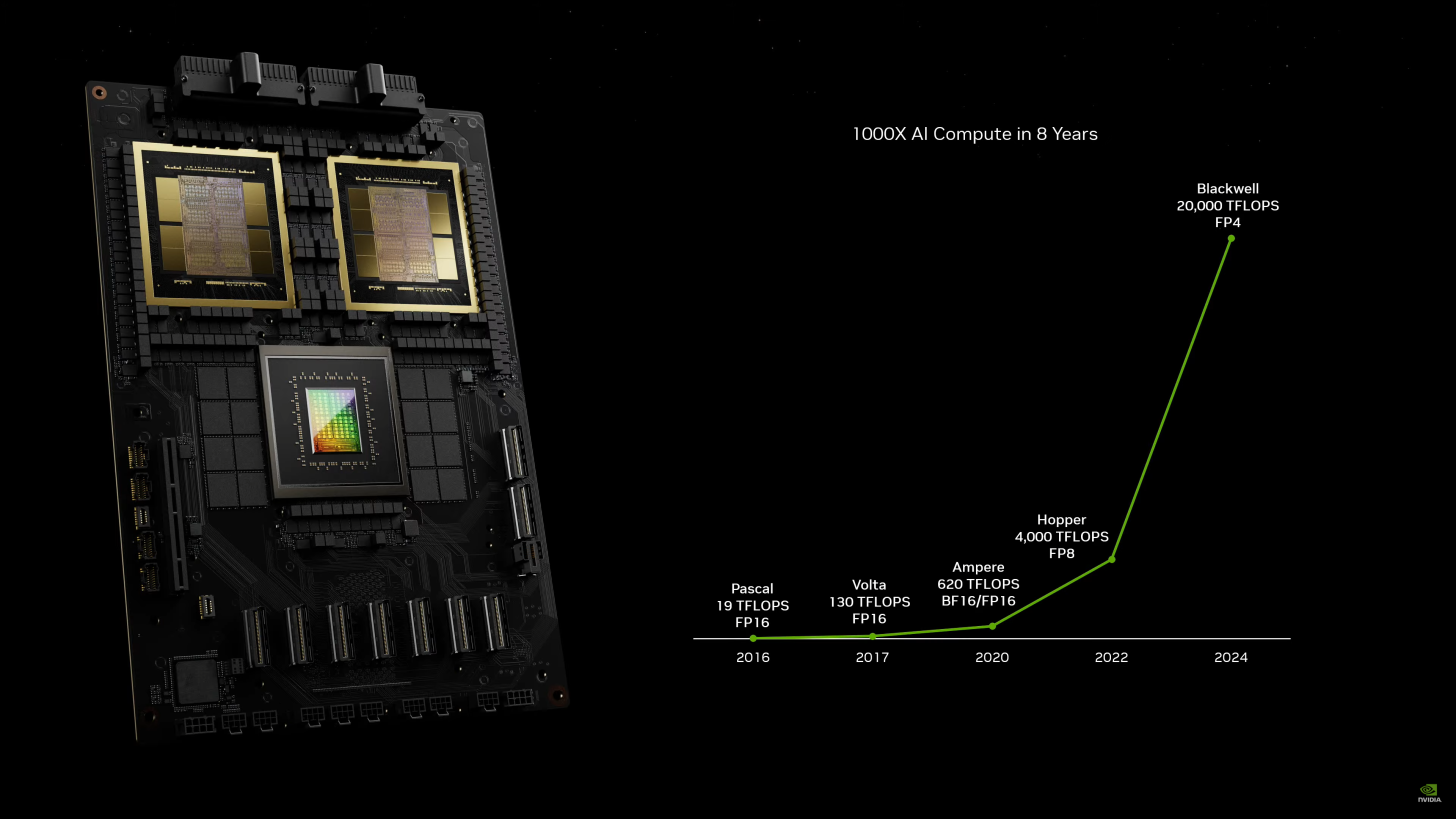

NVIDIA CEO: our next-gen Blackwell GPU will cost $30,000 to $40,000 each

NVIDIA's next-generation Blackwell B200 AI GPUs cost $30,000 to $40,000 each, while the company poured over $10 billion USD into R&D of Blackwell.

You get 8 of the GPUs in a system that only uses 14kW. The GPU memory is 1,440GB total.
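For a sense of scale, the quoted system totals can be split per GPU. This is plain arithmetic on the two figures above; the 14kW covers the whole system (CPUs, networking, fans), so the per-GPU power share is an upper bound, not what each B200 actually draws.

```python
# System-level figures quoted above for the 8-GPU Blackwell system.
SYSTEM_GPUS = 8
SYSTEM_POWER_KW = 14.0        # whole system, not just the GPUs
SYSTEM_GPU_MEMORY_GB = 1440   # total GPU memory

memory_per_gpu_gb = SYSTEM_GPU_MEMORY_GB / SYSTEM_GPUS
power_share_per_gpu_kw = SYSTEM_POWER_KW / SYSTEM_GPUS

if __name__ == "__main__":
    print(f"GPU memory per GPU:        {memory_per_gpu_gb:.0f} GB")
    print(f"System power per GPU slot: {power_share_per_gpu_kw:.2f} kW (upper bound)")
```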

Mercutio

Fatwah on Western Digital

The main goal I have is to find the right GPU for content creation acceleration. Probably LM as well. The field in this area is very uneven. Adobe does a lot with Intel Xe cores and RTX, but almost nothing with AMD. I have a theory that this is because Apple was using AMD GPUs leading up to the M-silicon switch, and Apple wants to make its older products look worse in comparison. In any case, AMD is absolutely king of the hill for my video editing application; an RX 7900 is more capable and helpful for Resolve than an RTX 4090, but Intel graphics offer more hardware encoding support that's specifically helpful for me. Other big-name content creation applications take their own approaches. Some of them really are nVidia-only, but the choice isn't as clear as it is for gamers.

jtr1962

Storage? I am Storage!

> You get 8 of the GPUs in a system that only uses 14kW. The GPU memory is 1,440GB total.

That pretty much would negate any need for heating my house in the winter.

And 20 petaflops? Holy cow! I was impressed with just the few hundred gigaflops today's CPUs can manage.

Mercutio

Fatwah on Western Digital

Are the better MACs inadequate for both those tasks?

I don't care if they are or not. I don't like Apple's architecture. More or less every computer I have ever owned has gotten RAM and storage updates over its useful life, and I definitely prefer to have dedicated GPU memory rather than letting it float on top of system RAM. I'm definitely not spending $5k up front just to have a computer that will still wind up being inadequate in a few years regardless. And I'm definitely DEFINITELY not living on a computer with a half dozen external drives plugged in, which seems to be SOP for every Mac user I know, even when there's a perfectly good file server in the same building.

And, once again, MAC and Mac are two different things.

jtr1962

Storage? I am Storage!

Do Macs still need special hard drives, or can they at least finally take regular commodity HDDs? I remember that being another quirk with them years ago where the pinout on the cable was different, no doubt so you could only buy hard drives from them at grossly inflated prices.

They don't use hard drives, but you can plug in external USB/Thunderbolt HDDs or SSDs. They also support SMB over 10GbE; not sure about iSCSI. QNAP and others make Thunderbolt NAS units, so you can share files between various platforms.

The whole MaC storage model sucks, but that has nothing to do with the video performance.

Chewy509

Wotty wot wot.

> Do Macs still need special hard drives, or can they at least finally take regular commodity HDDs?

On the current Apple Silicon series stuff, they are custom devices, since the actual drive controller is in the SoC and the 'HDD' is just a card with NAND flash and a bridge chip on it.

You can source upgrades and DIY, but you may need access to some Apple software to reset the drive controller in the SoC for it to work correctly (basically, to tell the drive controller to rotate the crypto keys and to account for any size changes).

It's pretty common for MacBook owners to have a Thunderbolt dock and a stack of external SSD/NVMe drives for additional storage. IIRC there are a few drive enclosures that talk Thunderbolt and let you build DAS RAID configurations if you need it.

More info: https://everymac.com/systems/apple/...-upgrade-mac-studio-internal-ssd-storage.html

Mercutio

Fatwah on Western Digital

The whole MaC storage model sucks, but that has nothing to do with the video performance.

Macs aren't better for video. They are well optimized and power efficient for it, but so is a Snapdragon 8 Gen 3. I'm sure Apple Silicon is Adobe's primary optimization target as well, and that definitely helps both sides, but for GPU compute workloads, the mainstream M3 Pro is somewhere between 4060 Ti and 4070 performance. The balance changes if you look at the biggest M3 (Ultra? I think?) chips with the largest amounts of RAM, because the Apple SoC can shift all its RAM over to the GPU workload and probably do that much more work entirely within the SoC.

It's pretty common for MacBook owners to have a Thunderbolt dock and a stack of external SSD/NVMe drives for additional storage. IIRC there are a few drive enclosures that talk Thunderbolt and let you build DAS RAID configurations if you need it.

This is a huge point of annoyance for me, actually. I don't support all that many Macs, but I can't get the Mac people to store their stuff somewhere it's going to get backed up or where they can find it again. Stacks of external drives piss me off.

Mercutio

Fatwah on Western Digital

You were the one that doesn't like the NVIdias for the video processing. So is it AMD>NVIdia>MAc, or some other order for video?

It's application specific. For Resolve, AMD is actually preferable to nVidia overall, but even that depends on assumptions about what is being processed and how; I still benefit from Intel because I want to preserve the chroma data from my camera. Adobe does very little on the GPU; from what I've read, everything is CPU-bound except some of the AI functions, thumbnail generation, and the actual file exports.

My specific objection to nVidia and AMD in the current generation comes from the fact that they don't bother to offer full hardware codecs for basically all the files that might come out of a contemporary camera. If you want to work with 10-bit Canon files, you more or less have to transcode everything to Rec709 (or ProRes HQ if you are doing an Apple workflow) as a first step. You can process and work from proxy files if you want, but the original video still has to be processed at some point.
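Before deciding whether to transcode, it helps to confirm what the camera actually recorded. The minimal Python sketch below shells out to FFmpeg's `ffprobe` (real options, but FFmpeg must be installed) to read the first video stream's codec and pixel format, then flags 10-bit 4:2:2 material. `ASSUMED_HW_422_10BIT_DECODE` is a hypothetical table that simply encodes the claim in this post (Intel decodes these files in hardware; current-generation nVidia and AMD do not); check it against each vendor's decode support matrix before relying on it.

```python
import json
import subprocess
import sys

# Hypothetical table encoding the post's claim about the current generation:
# whether each vendor's media engine decodes 10-bit 4:2:2 camera files.
ASSUMED_HW_422_10BIT_DECODE = {"intel": True, "nvidia": False, "amd": False}


def ffprobe_cmd(path: str) -> list[str]:
    """Build an ffprobe command that prints the first video stream as JSON."""
    return [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",
        "-show_entries", "stream=codec_name,pix_fmt",
        "-of", "json",
        path,
    ]


def probe_video_stream(path: str) -> dict:
    """Run ffprobe (must be on PATH) and return the first video stream's fields."""
    result = subprocess.run(ffprobe_cmd(path), capture_output=True, text=True, check=True)
    return json.loads(result.stdout)["streams"][0]


def is_10bit_422(stream: dict) -> bool:
    # FFmpeg names 10-bit 4:2:2 planar YUV "yuv422p10le" (or "yuv422p10be").
    return stream.get("pix_fmt", "").startswith("yuv422p10")


def transcode_suggested(stream: dict, vendor: str) -> bool:
    """True when the file is 10-bit 4:2:2 and the vendor is assumed to lack HW decode."""
    return is_10bit_422(stream) and not ASSUMED_HW_422_10BIT_DECODE.get(vendor, False)


if __name__ == "__main__" and len(sys.argv) > 1:
    info = probe_video_stream(sys.argv[1])
    for name in ASSUMED_HW_422_10BIT_DECODE:
        print(name, "transcode suggested:", transcode_suggested(info, name))
```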

Mac and Intel are kind of off to the side in these matters. An M-whatever is generally a fixed target for optimization, so even if the hardware is in and of itself not as powerful as discrete graphics, it can get more optimization work AND also benefit from allocating more system memory than a typical discrete card might have for at least some workloads. It's hard to do an apples-to-apples comparison with that. Intel is in somewhat the same boat since Xe graphics cores are found in fairly anemic desktop and notebook CPUs that a lot of systems will be stuck using, so there's a big incentive to optimize using the tools Intel offers.

If you were working full time at your own company, with maybe a couple of assistants at events, which gear would you use for processing videos for the customers?

All I know is that the iNtel Xeres sucks for still image NR processing. I'm wondering if the new 155H with the Arch GPU chip is much better than the 1360P and Xe?

Mercutio

Fatwah on Western Digital

If you were working full time at your own company, with maybe a couple of assistants at events, which gear would you use for processing videos for the customers?

If I'm using Resolve Studio, the correct answer right now is either an Intel CPU with an iGPU + a high-end Radeon, or a Threadripper with both Arc and Radeon hardware. That's the only video editing application I actually care about. Premiere is a crashy mess on Windows for reasons that seem unrelated to graphics hardware, so I pay it no mind.

All I know is that the iNtel Xeres sucks for still image NR processing. I'm wondering if the new 155H with the Arch GPU chip is much better than the 1360P and Xe?

nVidia has CUDA, and a lot of applications seem to get support for their acceleration functions in CUDA before it is implemented elsewhere. That's what makes nVidia the safe choice for most people, even if it isn't always the best. You really, truly have to look at what your applications want and research to see what's supported. I know that isn't a concrete answer, but the reality is that every application is different. In some cases, a GPU makes literally no difference at all (e.g. Luminar AI), and in others, like Resolve Studio, the application can send quite a bit of work to the GPU.

Unfortunately, GPUs are in the same uncomfortable period right now that 3D graphics accelerators were for gamers 30 years ago (Remember Glide? S3D? PowerSGL?). There are a bunch of weird and competing toolkits and capabilities, and nobody is bothering to share anything, although AMD is standing around with its hands in its pockets croaking about OpenCL, which is generally seen as the lesser stepchild to CUDA in every way, shape and form.

You know the laptops with discrete GPUs are heavy pigs, so nVidia is not really an option.

I'm not finding the right Compute data for Xerxes vs. Arch in the mobile "CPU" packages; maybe you know where to look.

Mercutio

Fatwah on Western Digital

The information may simply not exist, especially if you're zeroed in on DxO or Topaz as the tools you might be concerned with. The only generalization I know to be true is that there seems to be a significant performance improvement between the 4060-series nVidia cards and the 4070-series in content creation tools. Puget Systems more or less only offers workstation benchmarks for Adobe BS + Resolve, but it might be able to answer better in general terms. You can probably look at SpecFP benchmarks to get an idea of the generic computing power of a particular GPU, but even that might not be a helpful measurement if you pick something that isn't optimized for your applications of choice.

I have discovered that a 155H laptop can optionally be had with the 4050 mobile GPU. That implies the Arch GPU in the iNtel CPU is rather weak. I suppose it is better than the Xe in the older CPU, but it's probably not worth buying a 14th gen so soon after the 13th gen. I wanted to buy the last possible 28W laptop in that category since the Style seemed to be taking over, but I guess not yet. If they continue the mass increase, it will be pointless.

Mercutio

Fatwah on Western Digital

iGPUs are always going to be about getting to some minimal level of capability, but for my purposes, anything that has the codec feature set of Arc or Xe is giving me something that nVidia doesn't. It's the same thing with the Vega cores on AMD in integrated vs. discrete hardware.

Mercutio

Fatwah on Western Digital

It looks like vanilla 4070s are getting down to reasonable prices. I know that's because of dwindling supply, but I still see it as good news. The A750 and A770 are likewise seeing meaningful discounts; they're about $50 cheaper.

Supposedly Battlemage will hit in the 2nd half of 2024, and nVidia is talking about releasing 5090 SKUs before Xmas, which is thought to be a response to AMD's next-gen compute GPUs and to the fact that 5090s will be made with the same cores as the next round of datacenter products.

Mercutio

Fatwah on Western Digital

Apparently not until 2025.

I surely have no need to play the Battlemage in 2024 (or ever). I'm just wondering what will be better than what I have in the 300W power range and strictly 3 slots, especially if Zen 5 is a drop-in replacement.

Mercutio

Fatwah on Western Digital

The leaked specs seem to suggest Intel and AMD are both trying to offer things in what nVidia calls midrange, rather than making furnace-grade GPUs that exceed reality and all possible budgets; Battlemage is supposedly going to top out at that 300W sweet spot.

I swapped a former student my RX6650 for a five-days-out-of-the-box A770 last night. I haven't gotten to do anything interesting with it yet, but getting a 16GB card means that it'll have greater longevity for me than the old one did.

jtr1962

Storage? I am Storage!

> ... rather than making furnace-grade GPUs that exceed reality and all possible budgets ...

Speaking of that, I was in MicroCenter the other day and saw what I thought was a heat exchanger for a small air conditioner inside one of their display computers. I think it was an RX7900 video card. So much for more efficient processors. Unless I needed a space heater, I'd never run one of those. The only saving grace is that when it breaks, I'd have a nice heat sink for my Peltier module projects.

If we're going to continue down this path, maybe make these GPUs water-cooled, and have a hot water tank nearby. At least the waste heat will go towards something useful. I recently installed a 4 gallon hot water heater under my kitchen sink so I can turn off my very wasteful oil furnace during the eight warmest months. To shower I'll just kick it on about 15 minutes before showering, then turn it off again when I'm done. Anyway, the heater has a 1400W heating element. I figure a 300W GPU could do almost as good a job. It would just take two hours to recover if I used all 4 gallons, versus about 30 minutes now. With my hot water usage, that's fine. Normally I just have a few dishes at a time to wash.
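The recovery times above check out with the basic heat equation: energy = mass x specific heat x temperature rise, divided by heating power. A minimal Python sketch, assuming a 33 K rise (roughly 15 °C tap water heated to 48 °C), a GPU running at a steady 300 W, and that every watt ends up in the water, which a real GPU loop would only approach:

```python
LITERS_PER_US_GALLON = 3.785411784
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def recovery_minutes(gallons: float, temp_rise_k: float, heat_watts: float) -> float:
    """Minutes to heat a full tank, assuming ~1 kg per liter and no losses."""
    mass_kg = gallons * LITERS_PER_US_GALLON
    energy_j = mass_kg * WATER_SPECIFIC_HEAT_J_PER_KG_K * temp_rise_k
    return energy_j / heat_watts / 60.0


# Assumption: 33 K rise, e.g. 15 C incoming water heated to 48 C.
TANK_GALLONS, TEMP_RISE_K = 4, 33

if __name__ == "__main__":
    for source, watts in (("1400 W element", 1400), ("300 W GPU", 300)):
        print(f"{source}: {recovery_minutes(TANK_GALLONS, TEMP_RISE_K, watts):.0f} min")
```

With these assumptions the element takes about 25 minutes and the GPU a little under two hours, in line with the estimates in the post.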

Mercutio

Fatwah on Western Digital

The trend is definitely entering the space heater range. I roll my eyes every time Intel releases a new desktop CPU. Apparently i9s have been running on unbounded power consumption, even on E-cores where their contribution to overall performance is literally meaningless. High end nVidia is in the same boat. I get how they're more powerful than anything else that we'd stick in a PC, but what's the use case for those cards, even for gaming?

I suppose I do see a difference for workstation loads. If you're doing high precision visualization work or making graphics for a Star Wars movie, fine, great. Use all the two kilowatt/chassis PCs you need. Just don't sell every Tom, Dick and Harry a 400W CPU with a 700W GPU and call that "mainstream."

As you know, power is a real limit for many home users in the States. You can't get 2kW from NEMA 5-15, and most 1500VA UPS units are actually in the 1000W range on battery. I suppose there are some options for NEMA 5-20, but that usually requires rewiring and a permit.

I'm not sure how often both a CPU and GPU are at 100%, but there are other devices that use significant power, especially the display.

The 7900XT is not the biggest power hog compared to nVidia's cards. One of the advantages of the powerful water-cooled GPUs is that the card will be smaller and not encroach on other slots as much. Of course I'm hoping that the next gen is more efficient, but I can live with about 300W from a GPU because it's not operating at full power for long or often. I figure that a 300W GPU and a 200W+ Ryzen CPU will result in a basic system with about 700W max load at the outlet. I'd add about 12W per hard drive in NAS units and about 75W for an LED display.
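The same estimate can be written down as a quick power budget. The Python sketch below converts DC component loads to wall power through an assumed 90% efficient PSU, adds an assumed 80 W allowance for motherboard, RAM, fans and SSDs plus a hypothetical 4-drive NAS, and compares the total with a 15 A/120 V (NEMA 5-15) circuit derated to 80% for continuous loads (a common rule of thumb) and with the ~1000 W on-battery figure mentioned for a 1500VA UPS. All inputs are illustrative assumptions.

```python
# Assumed inputs; adjust to your own parts.
PSU_EFFICIENCY = 0.90
PC_DC_LOADS_W = {"gpu": 300, "cpu": 200, "board_ram_fans_ssd": 80}
OTHER_WALL_LOADS_W = {"led_display": 75, "nas_drives": 4 * 12}  # 4-bay NAS, ~12 W/drive

CIRCUIT_AMPS, LINE_VOLTS = 15, 120   # NEMA 5-15 receptacle on a 15 A circuit
CONTINUOUS_DERATE = 0.8              # rule of thumb for continuous loads
UPS_BATTERY_W = 1000                 # typical 1500VA UPS on battery, per the thread


def pc_wall_watts(dc_loads_w: dict, efficiency: float) -> float:
    """Wall draw of the PC: total DC load divided by PSU efficiency."""
    return sum(dc_loads_w.values()) / efficiency


pc_wall = pc_wall_watts(PC_DC_LOADS_W, PSU_EFFICIENCY)
total_wall = pc_wall + sum(OTHER_WALL_LOADS_W.values())
circuit_limit = CIRCUIT_AMPS * LINE_VOLTS * CONTINUOUS_DERATE

if __name__ == "__main__":
    print(f"PC at the wall:      {pc_wall:.0f} W")
    print(f"PC + display + NAS:  {total_wall:.0f} W")
    print(f"Circuit headroom:    {circuit_limit - total_wall:.0f} W of {circuit_limit:.0f} W")
    print(f"Fits on UPS battery: {total_wall <= UPS_BATTERY_W}")
```

The PC alone lands in the same ballpark as the ~700W estimate above; the point of writing it out is that each extra device eats into the same 15 A circuit.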

jtr1962

Storage? I am Storage!

Yeah, I'm getting the impression we're fast approaching a time when a PC will need to be on its own circuit breaker, much like a larger air conditioner.

ddrueding

Fixture

As summer approaches, I've turned the PC down. The GPU power limit is now 85% of stock, and the CPU has a 200W power limit set in the BIOS.

At a friend's place I used a water-to-water heat exchanger to let the watercooled PC pre-warm water heading for the water heater. The reservoir didn't have to be that big (10 liters maybe?) to sink the heat between showers, laundry, etc. When there is a demand for hot water and cold water starts to refill the water heater, the reservoir temperature drops very fast. The main concern is condensation if the incoming water is below the dew point.
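Both effects are easy to estimate. The Python sketch below assumes a 10 liter reservoir, a PC dumping a steady (hypothetical) 300 W into it, and no losses; it then uses the Magnus approximation for dew point (coefficients b = 17.62, c = 243.12 °C) to flag when a pipe surface at the incoming water temperature would sweat. These are illustrative assumptions, not measurements from that setup.

```python
import math

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def reservoir_rise_k_per_hour(liters: float, heat_watts: float) -> float:
    """Temperature rise per hour with no hot water draws (~1 kg per liter, no losses)."""
    return heat_watts * 3600.0 / (liters * WATER_SPECIFIC_HEAT_J_PER_KG_K)


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Magnus approximation of the dew point in degrees C."""
    b, c = 17.62, 243.12
    gamma = math.log(rel_humidity_pct / 100.0) + b * air_temp_c / (c + air_temp_c)
    return c * gamma / (b - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float, rel_humidity_pct: float) -> bool:
    """True when a surface (e.g. a cold inlet pipe) sits below the room's dew point."""
    return surface_temp_c < dew_point_c(air_temp_c, rel_humidity_pct)


if __name__ == "__main__":
    print(f"10 L at 300 W: +{reservoir_rise_k_per_hour(10, 300):.1f} K per hour")
    print(f"Dew point at 25 C / 60% RH: {dew_point_c(25, 60):.1f} C")
    print("10 C inlet water sweats:", condensation_risk(10, 25, 60))
```

At roughly 26 K per hour with no draws, a reservoir that small relies on regular hot water use to dump its heat, which fits the showers-and-laundry pattern described above.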
