ddrueding

Fixture

My first assumption was that there was some equivalence here, with "No Resiliency" meaning RAID0 and the other options stepping through RAID5, RAID6, and RAID1. This was reinforced when I added a 4th drive to my "No Resiliency" pool: the OS asked if I wanted to optimize drive usage, then spent many hours rebalancing utilization across all the drives.

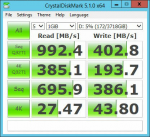
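If "No Resiliency" really behaved like RAID0, data would be split into fixed-size stripe units rotated round-robin across the drives, so a large sequential transfer should approach the sum of the individual drives' throughput. The minimal Python sketch below shows classic RAID0 address mapping. It assumes a hypothetical 64 KiB stripe unit and illustrates generic RAID0 only, not how Storage Spaces actually lays out data.

```python
# Minimal sketch of classic RAID0 striping (generic RAID0, NOT Storage Spaces'
# actual layout). STRIPE_UNIT is a hypothetical value chosen for illustration.

STRIPE_UNIT = 64 * 1024  # hypothetical 64 KiB stripe unit


def locate(logical_offset: int, num_drives: int,
           stripe_unit: int = STRIPE_UNIT) -> tuple[int, int]:
    """Map a logical byte offset to (drive index, byte offset on that drive)."""
    unit_index, within_unit = divmod(logical_offset, stripe_unit)
    drive = unit_index % num_drives      # stripe units rotate across drives
    row = unit_index // num_drives       # full rows of stripe units before this one
    return drive, row * stripe_unit + within_unit


def ideal_sequential_mbps(single_drive_mbps: float, num_drives: int) -> float:
    """Upper bound for a large sequential transfer if every drive streams in parallel."""
    return single_drive_mbps * num_drives


if __name__ == "__main__":
    # Walk six consecutive stripe units across a 4-drive set.
    for offset in range(0, 6 * STRIPE_UNIT, STRIPE_UNIT):
        print(offset, locate(offset, num_drives=4))
```

Under that model, four drives that each manage a given sequential speed would ideally deliver about four times that speed as a pool, which is the expectation the benchmarks below are measured against.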

However, performance of the pool is poor:

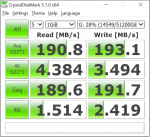

I know that any one of these drives on its own can do much better:

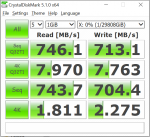

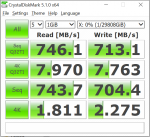

And here is a Windows SoftRAID Stripe of the same drives:
