ddrueding

Fixture

That's just silly. I like it... A LAN party without the LAN in a single box.

Seriously, if this had existed when I owned the LAN center I would have been all over it.

On to selecting some storage. Eeek, what did I just do...

What sort of storage are you looking for? SSDs and/or spinners? What sizes and how many?

What you really need is for your NAS to support iSCSI and those 10Gb connections we were talking about... :diablo:

Yes, that'd be wonderful if I wanted to use my large RAID-6 array as a scratch disk for other machines. However, I don't.

This is what I might eventually do. I'm likely going to restructure my network to include some localized 10Gb and consider using iSCSI.

It's not practical for me to run fiber all over my house, or at least I don't think it is. Can you buy a reel of OM3 fiber, run it like CAT5/6, terminate it at a wall plate, and then use short patch fiber "cables", etc.?

Luckily for me these two servers will sit right next to my NAS for now, so I can run twinax or OM2/OM3 cabling at short distances.

I think you can buy reels of OM2/OM3, but I don't quite know the cost of the equipment to terminate and test the connection ends.

I think it has to be terminated at the SFP+ module though. AFAIK, you can't terminate it at a wall plate and use a short patch "cable". That sort of rules all this out. My main server and backup box will be sitting next to or perhaps on top of each other and will have twinax DACs connecting them.

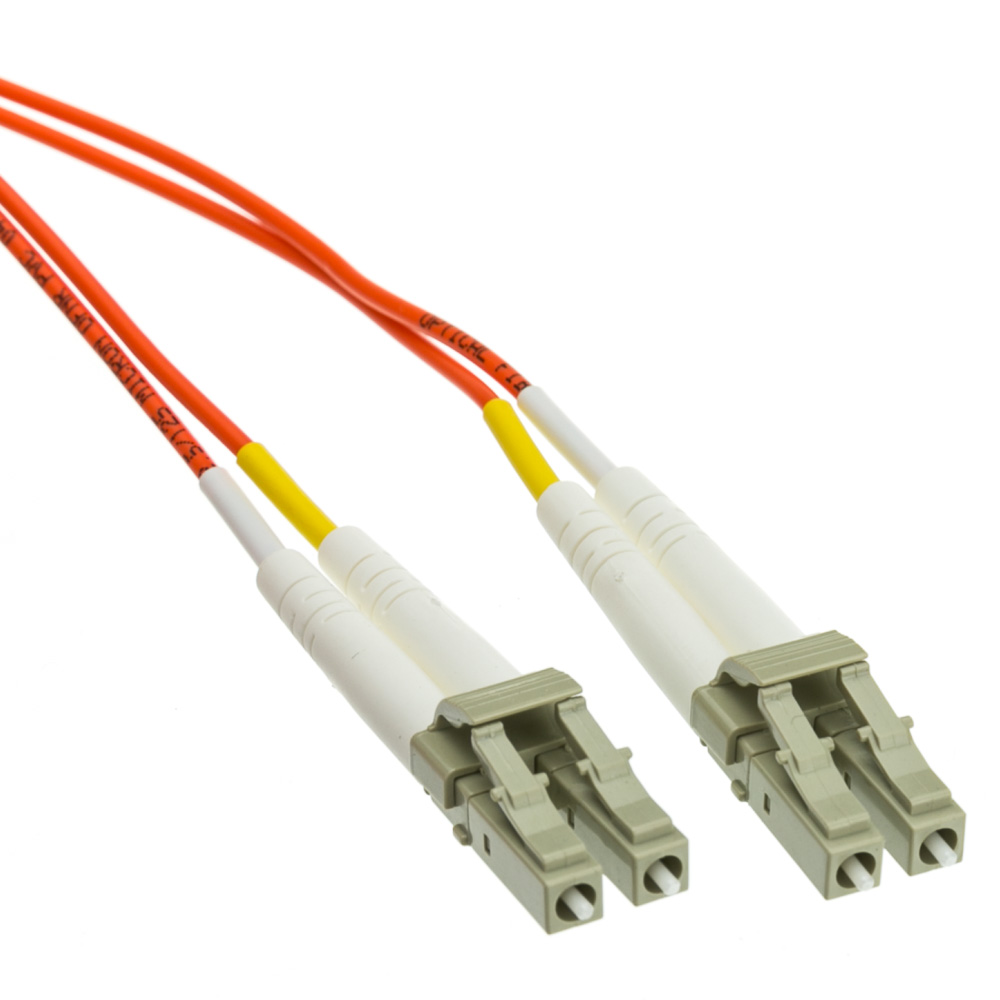

You can use a coupler like this one for the cable inside your wall :I think it has to be terminated at the SFP+ module though. AFAIK, you can't terminate it at a wall plate and use a short patch "cable".

Note to self, do not buy more Toshiba HDDs. Sure enough that wording is on the warranty paper in the 4TB consumer drives I bought. I have no idea if it's in the warranty on the enterprise drives. They don't come with a paper.

Oh my...so they offer store credit to their own retail Toshiba store that's tied to your email account so you can't even sell it?!?

I don't know if I've ever RMAed anything. Isn't it almost always a waste of time?

Well, you're a warranty writer's dream. :rofl:

dd, why are you looking at UnRAID as opposed to Windows Server or ESXi, two products for which you have vastly more experience? What's it giving you? I briefly considered an UnRAID system last time I changed my storage server but in the end I rejected it because of its limited support for addressable storage devices.

So, I'm pretty sure something is missing here.

View attachment 1043

And my first v1 E5-26xx build grinds to a halt. Oh, I have all the pieces, just some of them aren't usable. Minor detail...

Umm...did they use a crowbar to remove it?! Which vendor was this one from?

Nah, I think they used a chisel. It was from these guys. I sent them a polite, nicely worded message demanding an intact replacement or a refund.

Haven't used eBay in ages. Every once in a while I'm tempted, then someone posts something like this. Occasionally I'll take my chances with someone who isn't at least "fulfilled by Amazon" on their platform, but that is as risky as I'll get.

All of eBay's protections now side heavily with the buyer; it's hard to get burned as a buyer. The seller is sending me out an intact replacement CPU today. I've gotten a damaged or incorrect Blu-ray here or there, like a movie that wasn't actually the 3D version that was listed. The sellers go out of their way to avoid negative feedback. The return shipping label is pre-paid. Ultimately, if the seller doesn't accept returns and you get a damaged / broken / incorrect item, PayPal will refund your money.

I haven't used it since ~2007 when I bought something silly, but I placed an order Monday morning for two Intel server cases, two air ducts, four heat sinks, two IO shield plates, and two Intel RMM4 remote management ports. They all got delivered this morning with no issues and all were NIB as listed on eBay. They even combined the shipping rather than charging me separately per item.

My stuff from the same seller also came yesterday. I haven't opened any of the boxes yet since I was focused on building up the v1 E5-26xx system.

It's my experience that EVGA's modular cables aren't even compatible across product lines.

Indeed. Same with Corsair.

That's probably because neither is making their own supplies, so the cables / connectors are whatever the OEM for the various models is using.