Ryzen

Mercutio

Fatwah on Western Digital

There's another round of "high end Intel CPUs are melting" talk making the tech rounds today. 13th and 14th gen i9s are still dying from poor voltage regulation that can likely be attributed to poor communication of safe voltage limits on Intel's part, even though it is blaming motherboard OEMs for the actual issues.

In other words, that 14900K needs to run so damned hot to get its crazy benchmark numbers that it's breaking down the CPU itself, and the mitigation is to limit voltage to the point that it also loses its performance advantage. That means these chips are getting way too hot for basically no good reason.

Slightly lame USB controllers and finicky RAM compatibility suddenly don't seem so bad.

It looks like a cooling solution issue that might require a redesign of the CPU package and socket. It always seems strange to me that cooling is from the top cover only and there is none below. How is the cooling done for the 350-400W Xeon and EPYC server CPUs that run for years straight?

Those Xeon and Epyc chips have a lot more surface area to spread the heat across because the chips are fairly large. In addition to that, they're typically attached to large heatsinks with ducting and high-volume fans blowing lots of air across them, which is why they're often super noisy.

ddrueding

Fixture

The gaps accommodate surface mount components around the edge. That heatspreader is also so thick that removing it allows a massive improvement in cooling.

Intel's heat issues are bad enough that they are now allowing some certified installers to remove the heat spreader for the improved direct-die water cooling performance. If my CPU were near thermal throttling during normal use I'd do it myself, but for really spiky loads (like mine) even just an AIO is fine.

sedrosken

Florida Man

Tiny, useless detail, but I find it rather impressive that in the time that Intel's changed fonts for etching model numbers on the heat spreader some two or three times that I know of, AMD seems to have kept the same lettering from at least the socketed Athlon days. I'm willing to bet that if they'd been etching rather than engraving the lettering on the K6 line, we might well have seen it then too.

Curious on some thoughts about a server I'm looking to build to replace some old equipment in my homelab.

I've been considering the new AMD EPYC 4464P, which uses socket AM5 like the consumer CPUs. Most of what I've read suggests the EPYC 4464P has a lot in common with the Ryzen 9 7900X, with a couple of key differences. The EPYC 4464P officially supports ECC RAM (which is something I want) and its TDP is 65W vs 170W for the 7900X. I've heard the 7900X can support ECC, but it's unclear whether that's certain.

The downside is that the 4464P costs about $70 more than the 7900X, which isn't too terrible. Are there other options I should be considering around the same performance, TDP, and ECC support? My use case is to run Proxmox and host a bunch of VMs for various homelab purposes. I was looking to pair the 4464P with an ASRock Rack B650D4U motherboard because it has several key things I'm looking for.

Mercutio

Fatwah on Western Digital

You're losing out on RAM channels and PCIe lanes and I feel like that's a bit regrettable, but if you're homelabbing and just need a VM host, that's a lot of host. I don't think you're going to get that many decent cores on anything else.

You might be able to get more I/O from a threadripper but not at 65W.

I agree the RAM config does look a bit disappointing, but given the options and goals, I was trying to find a balance without a huge energy draw, and I don't want more noise either. The RAM speed drops if I populate all 4 DIMMs, so I was debating starting with 2 x 32GB for now. The motherboard spec says "5200 MHz (1DPC); 3600 MHz (2DPC)" and that feels like a significant drop in performance for adding more memory.
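To put a rough number on that drop, here is a minimal sketch of the theoretical peak bandwidth at each speed. It assumes the board's "MHz" figures are really MT/s and that the platform runs two 64-bit memory channels; the function name is just for illustration, and real-world VM performance will not scale one-to-one with peak bandwidth.

```python
# Theoretical peak DRAM bandwidth: transfers/s x bytes per transfer x channels.
# Assumptions (not from the board spec): the quoted "MHz" values are MT/s,
# and there are two 64-bit (8-byte) memory channels.

def peak_bandwidth_gbs(mega_transfers: float, channels: int = 2, bus_bytes: int = 8) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return mega_transfers * 1e6 * bus_bytes * channels / 1e9

one_dpc = peak_bandwidth_gbs(5200)  # two DIMMs, one per channel
two_dpc = peak_bandwidth_gbs(3600)  # four DIMMs, two per channel
drop_pct = (one_dpc - two_dpc) / one_dpc * 100

print(f"1DPC: {one_dpc:.1f} GB/s")
print(f"2DPC: {two_dpc:.1f} GB/s")
print(f"Peak bandwidth lost: {drop_pct:.1f}%")
```

Under those assumptions, going to four DIMMs gives up a bit under a third of the peak bandwidth, so starting with two DIMMs looks reasonable unless capacity is the bottleneck.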

I didn't need much more IO for what I'm planning to use it for. I will add in one PCIe 10Gb NIC and a couple of NVMe SSDs and that's most of what I need for basic VM and container storage. I'm considering adding in the Crucial T705 4TB PCIe Gen5 NVMe to get started.

This system will get connected to my NAS for bulk storage via the 10Gb NIC. The 16x PCIe will still remain available and I could use that for a storage controller or some kind of NVMe/m.2 breakout board if I want to expand that way.

Mercutio

Fatwah on Western Digital

The write endurance on that Crucial drive looks pretty low to me (2400TB), but I don't know how static your VMs will be, and of course it is very fast in a way that you'll surely appreciate. Overall that looks like a solid deployment plan.

What alternative NVMe drives should I be considering? I looked at the Sabrent Rocket 5 and it's also rated at 2400TB endurance. The Corsair MP700 is higher at 3000TB but it's also a bunch more money. Samsung only has a 990 EVO at PCIe 5.0, and it's much slower and half the size, so I didn't consider it. I probably don't need PCIe 5.0 and may not even notice the difference from a 4.0 device. Also, it appears that the Crucial T705 has dropped in price since I looked last night.

The workload won't be too write-intensive for what I'm planning. The majority will just be updating containers and local logging/caching, with an occasional workload that benefits from the storage IO. Since the storage isn't redundant, I consider the deployment expendable anyway. The OS/hypervisor will boot off a different SSD that'll likely be SATA-based since it doesn't need the performance, and this NVMe will mainly be for VM deployments. The docker containers will mount their read/write volumes from the NAS, which will back up their data and config, so if this SSD fails I can just redeploy the containers via a docker compose file after remounting the NAS.
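For a sense of scale on the endurance ratings, here is a rough sketch that turns a TBW rating into years of life at a given write rate. The 200 GB/day figure and the 5-year warranty period are assumptions made up for illustration, not measurements or spec values, and the function names are hypothetical.

```python
# Back-of-the-envelope SSD endurance math.
# Assumptions: 200 GB/day of writes and a 5-year warranty are illustrative
# guesses; only the TBW ratings and the 4 TB capacity come from the thread.

def years_of_life(tbw_tb: float, daily_writes_gb: float) -> float:
    """Years until the rated TBW is reached at a constant daily write rate."""
    return tbw_tb * 1000 / daily_writes_gb / 365

def dwpd(tbw_tb: float, capacity_tb: float, warranty_years: float = 5.0) -> float:
    """Drive writes per day implied by the TBW rating over the warranty."""
    return tbw_tb / (capacity_tb * 365 * warranty_years)

DAILY_WRITES_GB = 200  # assumed homelab write volume

for name, tbw in [("2400 TBW drive", 2400), ("3000 TBW drive", 3000)]:
    print(f"{name}: {years_of_life(tbw, DAILY_WRITES_GB):.1f} years")

print(f"4 TB at 2400 TBW: {dwpd(2400, 4):.2f} DWPD")
```

At that assumed write rate, even the 2400TB rating lasts decades, so endurance only becomes the deciding factor if the real write volume is far higher.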

It's just the same as the 7900X other than chipset support and power. If your current equipment is something like the 3000 series, then it will be a big improvement. CPU performance was about 10% lower going from 6000 to 4800 RAM speed. You can get 2x48GB.

I have a couple of the WD RED 4TB NAS SSDs. They are only PCIe 3.0, but 5100TBW. Seagate may also make similar SSDs for NAS, which may be good for your workload.

This should be a huge step up from the system it's replacing. It's a 10+ year old dual socket Xeon E5 2650 V2. The Epyc will have fewer cores but it's so much faster overall and uses a lot less power.

Mercutio

Fatwah on Western Digital

I usually look at SSD capacity and endurance and don't worry too much about R/W performance. Moving around VMs is one of the very small number of use cases where you might actually see something like the rated performance of a PCIe 5 SSD, but in practice I can't tell the difference between a Micron 9300 (PCIe gen 4) and a Gen 3 U.2 P4510 if I'm just moving around RAW files or something.

I do like U.2 as a form factor. I know how cool it is to just have m.2 sitting flush on your motherboard, but I like being able to move the drives away from the CPU / GPU, with a slab of heat sink around the drive and maybe a chassis fan right in front of it.

I'll spend some more time looking into U.2 drive options. I agree I'd rather not have to deal with the m.2 being heat soaked and throttling.

What do you use for a bridge or "controller" to connect the U.2 to either the m.2 or PCIe slot? In the past I've used a simple x4 PCIe card but haven't looked into more modern options.

Is the Micron 9300 still a decent option? I could get a couple of the 3.8TB and that would be plenty for my needs for a while.

Mercutio

Fatwah on Western Digital

I have a couple of m.2 to U.2 adapters. They have SFF-8643 ports on an m.2-shaped board and I want to say they cost me about $20. It's also possible to get them with a direct-attach U.2 cable on them. Mine are made by StarTech.

Mercutio

Fatwah on Western Digital

I forgot to answer about the Micron 9300s: They have been fine for me. Basically I haven't had a U.2 drive fail yet. I don't have tons of them, only about two dozen all told, and only 4 and 8TB models.

Alleged AMD Ryzen 9000 "Zen 5" Desktop CPU Prices Revealed: 9950X $499, 9900X $399, 9700X $299, 9600X $229

Alleged prices of AMD's Ryzen 9000 "Zen 5" Desktop CPUs have been revealed & it may look like the top 9950X chip would top out at $499 US.

The pricing information comes from Anandtech Forum member, Hail The Brain Slug, who allegedly knows someone working at BestBuy.

The info must be accurate then.

Mercutio

Fatwah on Western Digital

The leaked pricing I saw from a European retailer looks a lot more likely to me. I'm also willing to put $700 toward a 9950X if I have to. I'll be overjoyed if it's actually less at launch.

Does Best Buy sell CPUs now? I had no idea.

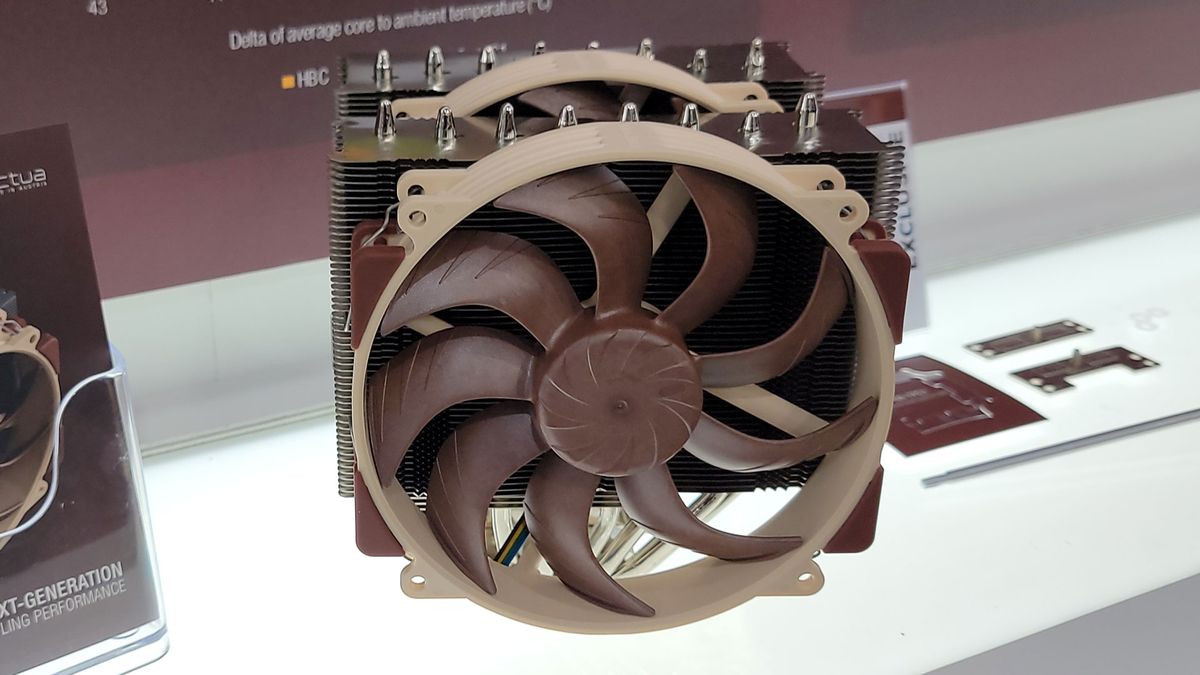

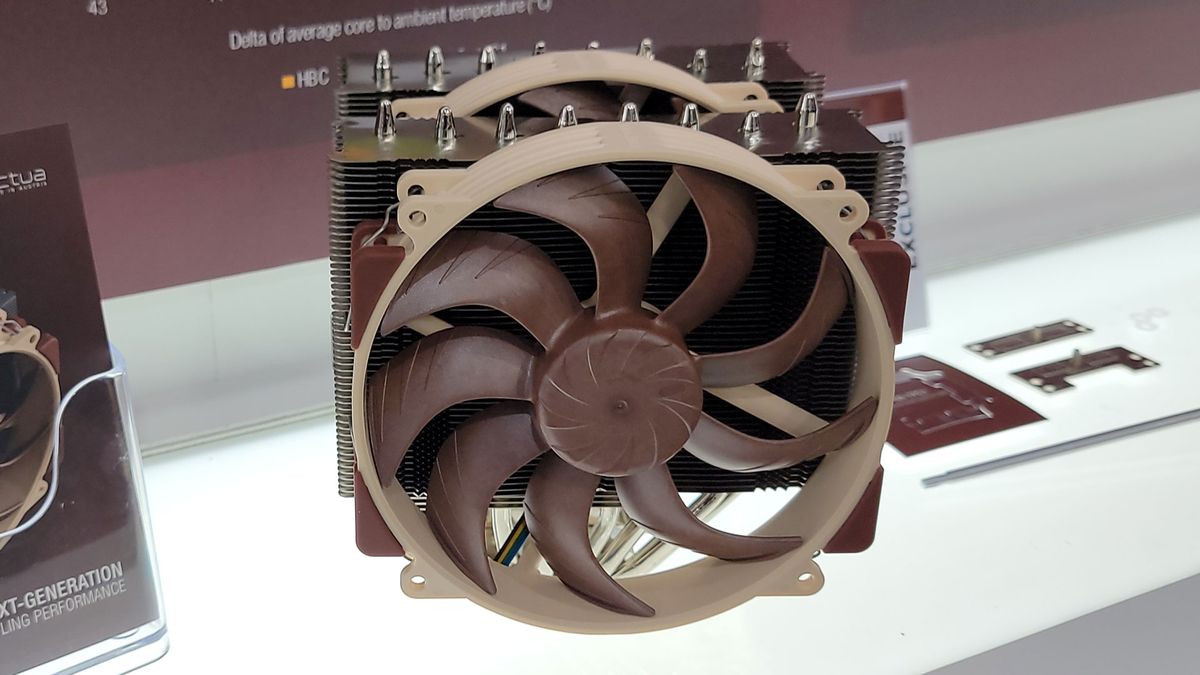

I don't know for sure if those are the only differences. I only found that video from Computex yesterday. Like most of these things, we'll have to wait for more in-depth reviews of their thermal and noise management to get a better picture of how much better, if at all, they end up being than the previous generation.

It looks like there are several differences in fin density, offset and overall size. It was neat to see they're trying to account for the odd shapes of the heat spreaders from both AMD and Intel.

I'm no big fan of AIOs; I prefer an HSF combo over them.

Noctua NH-D15 G2 LBC https://faqs.noctua.at/en/support/solutions/articles/101000526921

Would be the best one for AM4/AM5.

ddrueding

Fixture

I'd hold off just a bit, it seems Noctua missed a potential rattle in the G2. Currently offering full refunds. They are handling the situation in the best way possible, and are still the go-to in my opinion, but I'd just wait until they are shipping units where this issue is resolved.

www.tomshardware.com

Noctua responds to complaints of ‘rattling’ noise affecting its new NH-D15 G2 CPU air cooler

Austrian firm is still analyzing the situation but will agree to full refunds.

Based on the article it reads as a minor noise complaint, which obviously sucks for a new HSF designed to cool quietly. However, they also said they'll give full refunds if it happens and it is likely remediated with a small piece of tape across the fin.

If you order one and it has the issue, you may get it for free.

Replacing the CPU is PITA enough in my computer case so I was planning to replace both at the same time.

If Noctuas were sold from the US it would be easier, but I suspect that many of the faulty coolers will be around for a while.

I guess we will know in about two weeks how much heat the 9950X actually creates and whether the new D15 is useful. I'm thinking that the contact patch shape may be more important than the other changes.

Mercutio

Fatwah on Western Digital

Something to keep in mind about air coolers is that they work forever. Maybe you have to swap a fan, but pumps flat out die after a while. This is one of those things that keeps me away from AIOs: I can buy a $300 top end liquid cooler and at best, maybe I get four or five years out of it. Eventually the pump will crap out and I just have to buy a new one. I'd rather not. I'll take the HSF every time. It'll be easy to install and easy to service. That's all I really want to see.

ddrueding

Fixture

Yup. Whenever I make recommendations, it is for HSFs (Specifically the Noctua D-15 if there is budget for it), but I guess I like suffering, as I always go either AIO or full custom water for myself.

Five years is more than most high end builds will ever see and low end builds will be using wimpy CPUs that are fine on air.

I understand that individual builders don't want to deal with customers any more than necessary. The last thing you want to receive is a message that the cooling system is leaking fluid.

sedrosken

Florida Man

Yeah, no, I flirted with liquid cooling, but I quickly went back. The juice is simply not worth the squeeze. I'd much rather enforce sane power limits and use a modest HSF like the Thermalright Phantom Spirit I recently upgraded to. Then again, I don't have much use for the kind of rigs that usually necessitate a high end liquid setup anyway. I doubt I'll ever need much more than a midranger for anything.

Mercutio

Fatwah on Western Digital

I'm a big fan of the Thermalright Peerless Assassin for midrange builds. It's about 85% of what a NH-D15 is at about 1/3 the cost.

My five year old high end build will almost always get gifted to someone else. Probably not my Threadripper, but for example my ~10 year old X99 system is still living a healthy life as a modest gaming PC. I might even be willing to attribute the long and healthy life of that computer to having a quality HSF in the first place.

jtr1962

Storage? I am Storage!

I have used liquid cooling for thermoelectric projects. I even made my own copper water blocks. I'm able to keep temps about 1.5°C above the inlet water temps with a ~100W heat load. Using tap water in winter, I can get "hot" side temps of around 5°C. With thermoelectrics, every degree you lower hot side temps gives you about 0.70° lower cold side temps with a single stage, about 0.5° with two stages. That's how I got my temperature test chamber to approach -60°F. I may build a new version when I have the time using vacuum insulation panels and three stages. This might get me under -100°F, which would let me test stuff which is used in Antarctica.
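The rule of thumb above can be sketched in a few lines. This only encodes the quoted ratios (0.70 for one stage, 0.5 for two) plus a Fahrenheit conversion for the chamber targets; the function names are hypothetical, and no ratio is assumed for three stages since none was given.

```python
# Rule-of-thumb sketch: how much the cold side drops when the hot side is
# lowered, using only the ratios quoted in the post. Real thermoelectric
# modules are not this linear, so treat it as a rough estimate.

COLD_PER_HOT_DEGREE = {1: 0.70, 2: 0.5}  # stages -> cold-side change per degree

def cold_side_change(hot_side_drop_c: float, stages: int) -> float:
    """Estimated cold-side temperature drop (in degrees C) for a hot-side drop."""
    return hot_side_drop_c * COLD_PER_HOT_DEGREE[stages]

def f_to_c(temp_f: float) -> float:
    """Convert Fahrenheit to Celsius."""
    return (temp_f - 32) * 5 / 9

for stages in (1, 2):
    print(f"{stages} stage(s): 10 C cooler hot side -> "
          f"{cold_side_change(10, stages):.1f} C cooler cold side")

print(f"-60 F = {f_to_c(-60):.1f} C, -100 F = {f_to_c(-100):.1f} C")
```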

Don't much see the point for CPUs. I could use an old A/C evaporator and pump to recirculate the water, maybe get a temperature rise of 2°C for every 100 watts, but that's overkill. The goal is to keep the CPU under maybe 60°C. Air cooling generally works for that. The setup I described might be useful for a 2 kW CPU. Thankfully we haven't reached that yet, but at the rate Intel is going you never know.
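As a quick check on that 2 kW figure, here is a minimal sketch that treats the loop as a fixed thermal resistance, using the quoted 2°C rise per 100 watts. The 20°C ambient temperature is an assumption for illustration, the names are hypothetical, and the extra rise across the water block itself is ignored.

```python
# Linear thermal-resistance sketch for the recirculating loop described above.
# From the post: about 2 C of temperature rise per 100 W of heat load.
# Assumption: 20 C ambient; the water block's own rise is left out.

LOOP_RESISTANCE_C_PER_W = 2.0 / 100.0  # degrees C per watt

def loop_rise_c(heat_load_w: float) -> float:
    """Temperature rise over ambient for a given heat load."""
    return heat_load_w * LOOP_RESISTANCE_C_PER_W

AMBIENT_C = 20.0  # assumed room temperature

for watts in (100, 250, 2000):
    rise = loop_rise_c(watts)
    print(f"{watts:>5} W: +{rise:.1f} C over ambient, about {AMBIENT_C + rise:.1f} C")
```

With those numbers a 2 kW load lands right around the 60°C target, which lines up with the estimate that such a loop would only make sense for a CPU in that range.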

jtr1962

Storage? I am Storage!

Same here. 65W TDP is plenty for my needs.

I had a couple systems for 4-5 years and a laptop for even longer, but my upgrade cycles are shorter now like they were prior to Gulftown. Nowadays it's all e-wasted in a few years. It's too much of a security hassle to re-home them.